In some older Docker environments, starting containers with Docker Compose may fail with the following error:

Error response from daemon: could not find an available, non-overlapping IPv4 address pool among the defaults to assign to the network

This is actually a symptom of exhausting the default-address-pools. In these environments, docker-compose takes its networks from the Class B private range by default.

The private range starting with 172 runs from 172.16.0.0 to 172.31.255.255.

In other words, every docker-compose project we start eats up a whole Class B private subnet, which is very extravagant.

A Class B subnet can hold 65,534 hosts, which is equivalent to room for 65,534 services.
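To make these numbers concrete, here is a minimal sketch using Python's standard ipaddress module. It assumes, as described above, that each network takes a whole /16 out of the 172 private range; the variable names are only illustrative.

```python
import ipaddress

# The Class B private range described above: 172.16.0.0 to 172.31.255.255.
private_b = ipaddress.ip_network("172.16.0.0/12")

# Split the range into /16 blocks, one per docker-compose network
# (assumption: every network takes a full /16).
blocks = list(private_b.subnets(new_prefix=16))

# Usable hosts in one /16: all addresses minus network and broadcast.
hosts_per_block = blocks[0].num_addresses - 2

print(private_b[0], "-", private_b[-1])
print(len(blocks), "blocks of /16")
print(hosts_per_block, "usable hosts per block")
```

Under that assumption the whole range offers at most 16 such blocks, which is why the pool can be exhausted after a fairly small number of projects.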

You can use the command below to check which subnets your Docker networks are already using.

docker inspect $(docker network ls|tail -n+2|awk '{print $1}') -f "{{.IPAM}}"|sort

Taking my macOS machine as an example, the result is as follows (deviny/phpenv provides a ./all start function, so I deliberately created a bunch of env files to simulate this):

docker inspect $(docker network ls|tail -n+2|awk '{print $1}') -f "{{.IPAM}}"|sort

{default map[] []}

{default map[] []}

{default map[] [{10.99.0.0/24 map[]}]}

{default map[] [{172.17.1.0/24 172.17.1.1 map[]}]}

{default map[] [{172.18.0.0/16 172.18.0.1 map[]}]}

{default map[] [{172.19.0.0/16 172.19.0.1 map[]}]}

{default map[] [{172.20.0.0/16 172.20.0.1 map[]}]}

{default map[] [{172.21.0.0/16 172.21.0.1 map[]}]}

{default map[] [{172.22.0.0/16 172.22.0.1 map[]}]}

{default map[] [{192.168.112.0/20 192.168.112.1 map[]}]}

{default map[] [{192.168.128.0/20 192.168.128.1 map[]}]}

{default map[] [{192.168.144.0/20 192.168.144.1 map[]}]}

{default map[] [{192.168.160.0/20 192.168.160.1 map[]}]}

{default map[] [{192.168.176.0/20 192.168.176.1 map[]}]}

{default map[] [{192.168.192.0/20 192.168.192.1 map[]}]}

{default map[] [{192.168.208.0/20 192.168.208.1 map[]}]}

{default map[] [{192.168.224.0/20 192.168.224.1 map[]}]}

{default map[] [{192.168.240.0/20 192.168.240.1 map[]}]}

Every docker-compose project that is started automatically uses up one subnet. On my macOS machine, however, the pool does not fill up: Docker automatically switches to other ranges, such as the 192 range 😆.

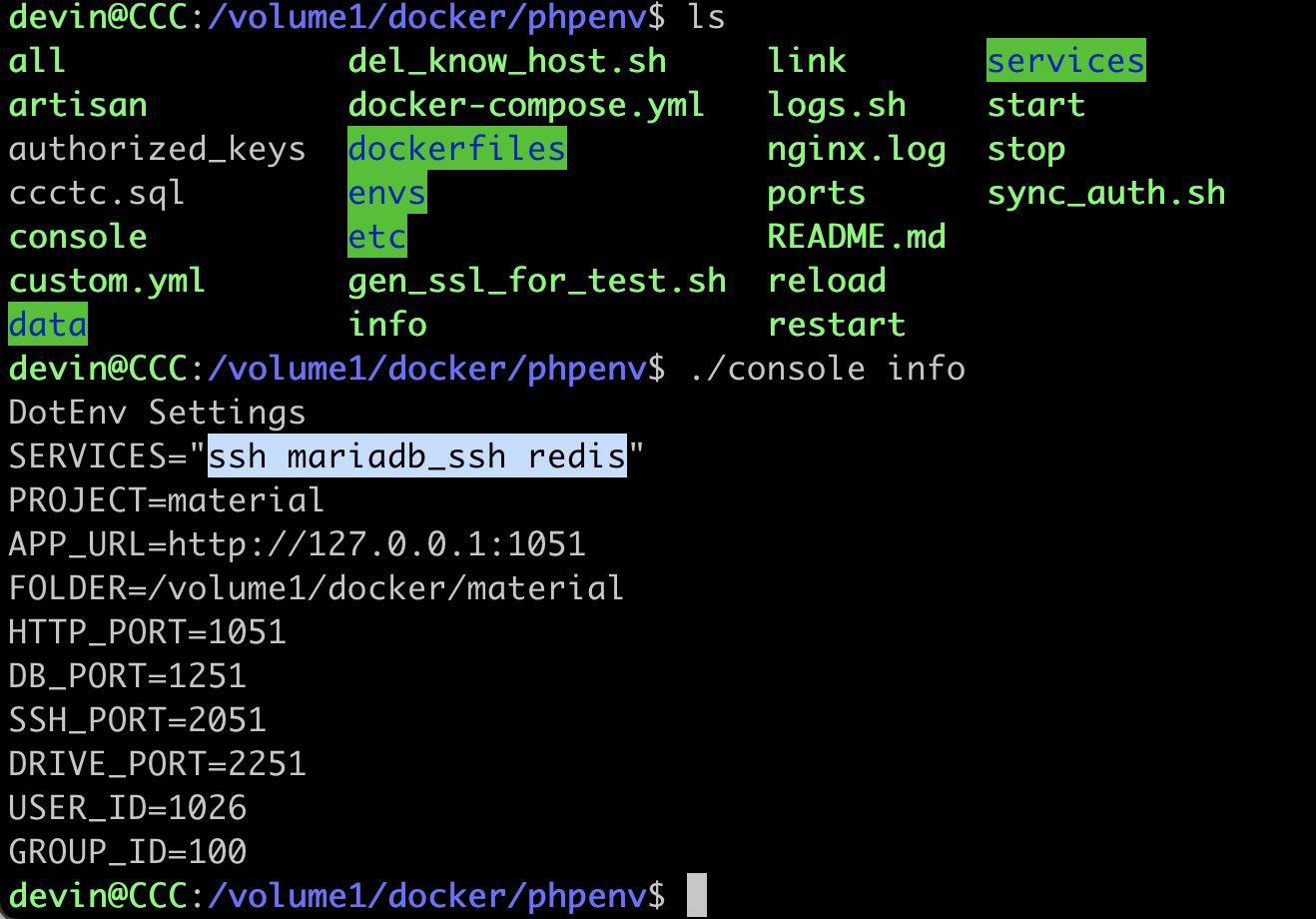
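The listing above mixes /16 networks from the 172 range with /20 networks from the 192 range. A small Python sketch like the following can tally them. The sample text is a shortened copy of the output above, and the helper name extract_subnets is made up for illustration.

```python
import ipaddress
import re
from collections import Counter

# A few lines copied from the output above.
sample_output = """\
{default map[] []}
{default map[] [{10.99.0.0/24 map[]}]}
{default map[] [{172.17.1.0/24 172.17.1.1 map[]}]}
{default map[] [{172.18.0.0/16 172.18.0.1 map[]}]}
{default map[] [{172.19.0.0/16 172.19.0.1 map[]}]}
{default map[] [{192.168.112.0/20 192.168.112.1 map[]}]}
{default map[] [{192.168.128.0/20 192.168.128.1 map[]}]}
"""

# A subnet looks like "a.b.c.d/nn"; gateway addresses have no "/nn" suffix.
SUBNET_RE = re.compile(r"\d+\.\d+\.\d+\.\d+/\d+")


def extract_subnets(text):
    """Return every subnet found in the {{.IPAM}} output as an IPv4Network."""
    return [ipaddress.ip_network(match) for match in SUBNET_RE.findall(text)]


subnets = extract_subnets(sample_output)

# Count how many networks use each prefix length (/16, /20, /24, ...).
by_prefix = Counter(net.prefixlen for net in subnets)

for prefix, count in sorted(by_prefix.items()):
    print(f"/{prefix}: {count} network(s)")
```

To run it against a real host, the sample text could be replaced with the actual output of the docker inspect command shown earlier.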

Now assume an environment that does not automatically switch subnets when the pool is full. Take deviny/phpenv's docker-compose environment as an example: the default .env defines web and php, plus three additional services, ssh, mariadb_ssh and redis.

You can think of them as different services in docker-compose.

Remarkably, there are only five services, and in this setup my mariadb service even runs inside the ssh service.

In this environment, building a whole Class B private subnet for these few services is a waste of IP addresses, especially since no cluster is being built.
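As a rough illustration of how much is wasted, the sketch below compares a /16 with the smallest subnet that would still fit five services. It assumes one extra address is reserved for the gateway; the function names are hypothetical helpers, not part of Docker.

```python
def usable_hosts(prefix_len):
    """Usable IPv4 host addresses in a subnet with the given prefix length."""
    return 2 ** (32 - prefix_len) - 2


def smallest_prefix(services):
    """Longest prefix (smallest subnet) that fits the services plus one gateway."""
    needed = services + 1  # assumption: one address is reserved for the gateway
    for prefix_len in range(30, 0, -1):
        if usable_hosts(prefix_len) >= needed:
            return prefix_len
    raise ValueError("too many services for an IPv4 subnet")


services = 5
used_share = (services + 1) / usable_hosts(16)

print(f"/16 offers {usable_hosts(16)} hosts; {services} services use {used_share:.4%}")
print(f"smallest subnet that fits: /{smallest_prefix(services)}")
print(f"a /24 would still leave {usable_hosts(24) - (services + 1)} spare addresses")
```

Even a /24, which is what the later tricks in this post use, leaves plenty of headroom for a project of this size.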

If your Docker environment unfortunately runs into the problem described above, here are my solutions for reference:

How to solve

Trick 1: Delete the networks that are not in use. Pay attention to the confirmation prompt. You can try this if you need a fix urgently, but I consider it a temporary workaround rather than a fix for the root cause: there may be no unused network left to delete, or the pool may already be almost full.

docker network prune

Trick 2: Manually specify the network

1. Create the network first, then start the containers. A manually created network is not removed automatically when docker-compose is brought down. At least that is how it behaves in my environment.

docker network create demo_dlaravel_net --subnet 10.99.0.0/24

Take deviny/phpenv as an example. Its project name is demo, so there will be a demo_dlaravel_net network, which is why the network in the command above is named demo_dlaravel_net.

Set the name according to your own folder name and the network name in your docker-compose.yml.
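Before creating a network by hand, it can help to check that the chosen subnet does not collide with one that is already in use. The following is a minimal sketch in Python; the helper names are made up for illustration, and the list of subnets in use is a few entries copied from the listing earlier in this post.

```python
import ipaddress


def compose_network_name(project, network):
    """Name used for the network in this post: <project>_<network>."""
    return f"{project}_{network}"


def find_conflicts(candidate, existing):
    """Return the existing subnets that overlap with the candidate subnet."""
    wanted = ipaddress.ip_network(candidate)
    return [net for net in existing if wanted.overlaps(ipaddress.ip_network(net))]


# Subnets already in use (a few taken from the listing earlier in this post).
in_use = ["172.17.1.0/24", "172.18.0.0/16", "192.168.112.0/20"]

name = compose_network_name("demo", "dlaravel_net")
conflicts = find_conflicts("10.99.0.0/24", in_use)

if conflicts:
    print("overlaps with:", conflicts)
else:
    # Safe to create: print the command rather than running it.
    print(f"docker network create {name} --subnet 10.99.0.0/24")
```

The sketch only prints the command; running it is left to you, so nothing changes on the host by accident.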

Not everyone uses deviny/phpenv, so here is my custom.yml for reference, which may be of more interest:

version: '3.6'
services:
  #=== web service ======================
  web:
    build:
      context: ./dockerfiles
      dockerfile: Dockerfile-nginx
      args:
        USER_ID: ${USER_ID-1000}
        GROUP_ID: ${GROUP_ID-1000}
    image: ${PROJECT}_nginx
    dns: 8.8.8.8
    depends_on:
      - php
    ports:
      - ${HTTP_PORT-1050}:80
      #- ${HTTPS_PORT-1250}:443
    volumes:
      - ${FOLDER-./project}:/var/www/html
      - ./etc:/etc/nginx/conf.d
    networks:
      - dlaravel_net
  #=== php service ==========================
  php:
    build:
      context: ./dockerfiles
      dockerfile: Dockerfile-php-7.4-${CPU-x86_64}
      args:
        USER_ID: ${USER_ID-1000}
        GROUP_ID: ${GROUP_ID-1000}
    image: ${PROJECT}_php
    volumes:
      - ./etc/php:/usr/local/etc/php/conf.d
      - ${FOLDER-./project}:/var/www/html
      - ./etc/php-fpm.d/www.conf:/usr/local/etc/php-fpm.d/www.conf
      - ./etc/cache:/home/dlaravel/.composer/cache
      - ./etc/supervisor:/etc/supervisor/conf.d
    environment:
      - TZ=Asia/Taipei
      - project=${HOST-localhost}
    command: ["sudo", "/usr/bin/supervisord"]
    networks:
      dlaravel_net:
#=== top-level dlaravel_networks key ======================
networks:
  dlaravel_net:

Trick 3: The ultimate move is to adjust daemon.json directly, although this requires restarting Docker.

On Linux, the file is located at

/etc/docker/daemon.json

On macOS, the file is located at

~/.docker/daemon.json

For example, on my Linux host I added a bunch of subnets:

"default-address-pools": [
  {"base":"172.17.1.0/24","size":24},
  {"base":"172.17.2.0/24","size":24},
  {"base":"172.17.3.0/24","size":24},
  {"base":"172.17.4.0/24","size":24},
  {"base":"172.17.5.0/24","size":24},
  {"base":"172.17.6.0/24","size":24},
  {"base":"172.17.7.0/24","size":24},
  {"base":"172.17.8.0/24","size":24},
  {"base":"172.17.9.0/24","size":24},
  {"base":"172.17.10.0/24","size":24},
  {"base":"172.17.11.0/24","size":24},
  {"base":"172.17.12.0/24","size":24},
  {"base":"172.17.13.0/24","size":24},
  {"base":"172.17.14.0/24","size":24},
  {"base":"172.17.15.0/24","size":24},
  {"base":"172.17.16.0/24","size":24},
  {"base":"172.17.17.0/24","size":24},
  {"base":"172.17.18.0/24","size":24},
  {"base":"172.17.19.0/24","size":24},
  {"base":"172.17.20.0/24","size":24},
  {"base":"172.17.21.0/24","size":24},
  {"base":"172.17.22.0/24","size":24},
  {"base":"172.17.23.0/24","size":24},
  {"base":"172.17.24.0/24","size":24},
  {"base":"172.17.25.0/24","size":24},
  {"base":"172.17.26.0/24","size":24},
  {"base":"172.17.27.0/24","size":24},
  {"base":"172.17.28.0/24","size":24},
  {"base":"172.17.29.0/24","size":24},
  {"base":"172.17.30.0/24","size":24},
  {"base":"172.17.31.0/24","size":24},
  {"base":"172.17.32.0/24","size":24},
  {"base":"172.17.33.0/24","size":24},
  {"base":"172.17.34.0/24","size":24},
  {"base":"172.17.35.0/24","size":24},
  {"base":"172.17.36.0/24","size":24},
  {"base":"172.17.37.0/24","size":24},
  {"base":"172.17.38.0/24","size":24},
  {"base":"172.17.39.0/24","size":24},
  {"base":"172.17.40.0/24","size":24}
]

If you run the check command again, many subnets now have a /24 mask, and the pool should no longer run out so easily 😆.

$ docker inspect $(docker network ls|tail -n+2|awk '{print $1}') -f "{{.IPAM}}"|sort

{default map[] []}

{default map[] []}

{default map[] [{10.99.33.0/24 10.99.33.1 map[]}]}

{default map[] [{172.17.10.0/24 172.17.10.1 map[]}]}

{default map[] [{172.17.1.0/24 map[]}]}

{default map[] [{172.17.12.0/24 172.17.12.1 map[]}]}

{default map[] [{172.17.16.0/24 172.17.16.1 map[]}]}

{default map[] [{172.17.2.0/24 172.17.2.1 map[]}]}

{default map[] [{172.17.21.0/24 172.17.21.1 map[]}]}

{default map[] [{172.17.25.0/24 172.17.25.1 map[]}]}

{default map[] [{172.17.4.0/24 172.17.4.1 map[]}]}

{default map[] [{172.17.6.0/24 172.17.6.1 map[]}]}

{default map[] [{172.17.7.0/24 172.17.7.1 map[]}]}

{default map[] [{172.17.8.0/24 172.17.8.1 map[]}]}

{default map[] [{172.17.9.0/24 172.17.9.1 map[]}]}

{default map[] [{172.18.0.0/16 172.18.0.1 map[]}]}

{default map[] [{172.25.0.0/16 172.25.0.1 map[]}]}
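Typing forty pool entries by hand is error-prone, so a list like the one in the daemon.json example can also be generated. This is only a sketch in Python: build_pools is a hypothetical helper, and the existing configuration shown here is an invented placeholder rather than my real file.

```python
import json


def build_pools(first, last, size=24):
    """Build default-address-pools entries for 172.17.<first>.0 to 172.17.<last>.0."""
    return [
        {"base": f"172.17.{third}.0/{size}", "size": size}
        for third in range(first, last + 1)
    ]


# Hypothetical existing daemon.json content; real files may hold other keys.
config = {"log-driver": "json-file"}

# Merge the generated pools without touching the other settings.
config["default-address-pools"] = build_pools(1, 40)

print(json.dumps(config, indent=2))
```

The script only prints the merged result. Writing it back to daemon.json and restarting Docker remains a manual step.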
